Online Monitoring Shift

Revision as of 11:37, 26 January 2017

The Online Monitoring System

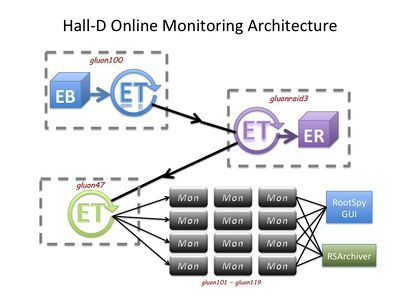

The Online Monitoring System is a software system that couples with the Data Acquisition System to monitor the quality of the data as it is read in. Its purpose is to ensure that the detector systems produce data of sufficient quality that offline analysis is likely to succeed and yield a physics result. The system itself does not contain alarms or automated checks on the data. Rather, it supplies histograms and relies on shift takers to inspect them periodically to ensure that all detectors are functioning properly.

Events will be transported across the network via the ET (Event Transfer) system developed and used as part of the DAQ architecture. The configuration of the processes and nodes is shown in Fig. 1.

Routine Operation

Starting and stopping the Monitoring System

The monitoring system should be automatically started and stopped by the DAQ system whenever a new run is started or ended (see Data Acquisition for details on how to do that). Shift workers will usually only need to start the RootSpy interface described in the following section. Shift workers may also start or stop the monitoring system itself by hand if needed. This should be done from the hdops account by running either the start_monitoring or stop_monitoring script. The same can be done via buttons in the hdmongui.py program (see Fig. 2).

These scripts may be run from any gluon computer since they will automatically launch multiple programs on the appropriate computer nodes. If processes are already running on the nodes, new ones are not started, so it is safe to run start_monitoring multiple times. To check the status of the monitoring system, run the hdmongui.py program as shown in Fig. 2. A summary is given in the following table:

| Program | Action |
|---|---|
| start_monitoring | Starts all programs required for the online monitoring system |
| stop_monitoring | Stops all monitoring processes |
| hdmongui.py | Starts graphical interface for monitoring the Online Monitoring system |
| start_hdview2 | Starts graphical event viewer with the correct parameters to connect to current run |
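
The "safe to run multiple times" behaviour described above can be illustrated with a minimal sketch. This is not the actual start_monitoring script; the function name `start_missing`, the callbacks, and the process names are hypothetical and chosen only to show the idea of launching just the processes that are not already running.

```python
from typing import Callable, Iterable, List


def start_missing(required: Iterable[str],
                  is_running: Callable[[str], bool],
                  launch: Callable[[str], None]) -> List[str]:
    """Launch only the processes that are not already running.

    Returns the names that were actually launched, so a second call
    with nothing missing returns an empty list.
    """
    launched = []
    for name in required:
        if is_running(name):
            continue  # already up: leave it alone
        launch(name)
        launched.append(name)
    return launched


if __name__ == "__main__":
    # Hypothetical process names; a set stands in for the real process table.
    running = {"producer_a"}
    required = ["producer_a", "producer_b"]
    print(start_missing(required, running.__contains__, running.add))
    print(start_missing(required, running.__contains__, running.add))
```

In this sketch the first call launches only the missing process and the second call launches nothing, which is the property that makes repeated invocation harmless.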

Viewing Monitoring Histograms

Live histograms may be viewed using the RootSpy program. Start it from the hdops account on any gluon node via the start_rootspy wrapper script. It will communicate with all histogram producer programs on the network and start cycling through a subset of them for shift workers to monitor. Users can turn off the automatic cycling and select different histograms to display using the GUI itself.

Viewing Reference Plots: Under normal operations, pages displayed by RootSpy are generated by macros. These may contain multiple histograms. Shift workers should monitor these by comparing them to a reference plot. To see the reference plot, press the "Show Reference Plot" button at the bottom of the RootSpy main window. This will open a separate window that displays a static image of the reference plot for comparison. This window will update automatically as RootSpy rotates through its displays. Therefore, the reference plot window can (and should) be left open and near the RootSpy window for shift workers to monitor. For information on updating a specific reference plot, see the section on Reference Plots below.

Resetting Histograms: The RootSpy GUI has a pair of buttons labeled Reset and Un-reset. The first will reset the local copies of all histograms displayed in all pads of the current canvas. This does *not* affect the histograms in the monitoring processes and therefore has no effect on the archive ROOT file. What it actually does is save a copy of the existing histogram(s) in memory and, as the run progresses, subtract that copy from what it receives from the producers before displaying the result. This feature allows one to periodically reset any display without stopping the program or disrupting the archive. Un-reset simply deletes the saved copies, allowing one to return to viewing the full statistics.
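
The Reset and Un-reset mechanism described above amounts to baseline subtraction on the display side. The following is a minimal sketch of that idea and not RootSpy's implementation; the class name `ResettableView` is hypothetical, and histograms are modelled as plain lists of bin contents.

```python
from typing import List, Optional


class ResettableView:
    """Display-side view of one histogram with Reset / Un-reset."""

    def __init__(self) -> None:
        self._baseline: Optional[List[float]] = None

    def reset(self, current: List[float]) -> None:
        # Keep a private copy of the current contents; the producer's
        # histogram (and hence any archive) is never modified.
        self._baseline = list(current)

    def unreset(self) -> None:
        # Drop the copy so the full statistics are shown again.
        self._baseline = None

    def displayed(self, incoming: List[float]) -> List[float]:
        # Subtract the saved baseline, bin by bin, from what arrives.
        if self._baseline is None:
            return list(incoming)
        return [new - old for new, old in zip(incoming, self._baseline)]


if __name__ == "__main__":
    view = ResettableView()
    view.reset([5.0, 7.0])              # user presses Reset
    print(view.displayed([8.0, 7.0]))   # only entries since the reset
    view.unreset()                      # user presses Un-reset
    print(view.displayed([8.0, 7.0]))   # full statistics again
```

Because only the viewer's copy is changed, several viewers could reset independently without affecting each other or the producers.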

| Program | Action |
|---|---|
| start_rootspy | Starts RootSpy GUI for viewing live monitoring histograms |

Reference Plots

Detector experts are ultimately responsible for ensuring the currently installed reference plots are correct. The plots are stored in the directory pointed to by the ROOTSPY_REF_DIR environment variable. This is set in the /gluex/etc/hdonline.cshrc file (normally set to /gluex/data/REFERENCE_PLOTS). One can always see the full path to the currently displayed reference plot at the top of the RootSpy Reference Plot window along with the modification date/time of the file.
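
For anyone checking the installed reference plots outside the GUI, the lookup described above can be sketched as follows. The helper names are hypothetical, and falling back to /gluex/data/REFERENCE_PLOTS when ROOTSPY_REF_DIR is unset is an assumption made for this example, not documented RootSpy behaviour.

```python
import os
from datetime import datetime
from pathlib import Path
from typing import Mapping

# The "normal" value quoted in the text; using it as a fallback is an assumption.
DEFAULT_REF_DIR = "/gluex/data/REFERENCE_PLOTS"


def reference_dir(env: Mapping[str, str] = os.environ) -> Path:
    """Return the reference plot directory named by ROOTSPY_REF_DIR."""
    return Path(env.get("ROOTSPY_REF_DIR", DEFAULT_REF_DIR))


def describe(plot: Path) -> str:
    """Full path plus modification time, similar to what the window title shows."""
    mtime = datetime.fromtimestamp(plot.stat().st_mtime)
    return f"{plot}  (modified {mtime:%Y-%m-%d %H:%M:%S})"


if __name__ == "__main__":
    ref_dir = reference_dir()
    print("Reference plot directory:", ref_dir)
    if ref_dir.is_dir():
        for plot in sorted(p for p in ref_dir.iterdir() if p.is_file()):
            print(describe(plot))
```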

The recommended way of updating a reference plot is via the RootSpy main window itself. At the bottom of the window is a button "Make this new Reference Plot". This will capture the current canvas as an image and save it to the appropriate directory with the appropriate name. Moreover, it will first move any existing reference plot to an archive directory prefixed with the current date and time. This will serve as an archive of when each reference plot was retired.
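
The archive-then-replace step described above can be sketched as below. This is an illustration only: the function name `install_reference`, the `archive` subdirectory name, and the timestamp format are assumptions, and the sketch prefixes the archived file name with the date and time, which is one possible reading of the description.

```python
import shutil
from datetime import datetime
from pathlib import Path
from typing import Optional


def install_reference(new_image: Path, ref_dir: Path, name: str,
                      now: Optional[datetime] = None) -> Path:
    """Install new_image as ref_dir/name, archiving any existing plot first."""
    now = now or datetime.now()
    ref_dir.mkdir(parents=True, exist_ok=True)
    target = ref_dir / name
    if target.exists():
        # Hypothetical archive location and naming scheme.
        archive = ref_dir / "archive"
        archive.mkdir(exist_ok=True)
        stamp = now.strftime("%Y%m%d_%H%M%S")
        shutil.move(str(target), str(archive / f"{stamp}_{name}"))
    shutil.copyfile(new_image, target)
    return target
```

Moving the old file before writing the new one means the archive records when each reference plot was retired, and a failed copy never overwrites the only existing reference.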

Expert personnel

Expert details on the Online Monitoring system can be found here. The individuals responsible for the Online Monitoring are shown in the following table. Problems with normal operation of the Online Monitoring should be referred to those individuals, and any changes to its settings must be approved by them. Additional experts may be trained by the system owner and their name and date of qualification added to this table.

| Name | Extension | Date of qualification |
|---|---|---|
| David Lawrence | 269-5567 | May 28, 2014 |