HOWTO Execute a Launch using PSC

Here is some information on using the Bridges system at the Pittsburgh Supercomputing Center (PSC) to process raw data from GlueX.

Note that detailed documentation is available at the PSC website here: https://www.psc.edu/bridges/user-guide

Quick Start

- Ensure automatic ssh login is working from the gxproj4 account on ifarm1901 (details below)

- Ensure the Globus credentials are set up to use your account (details below)

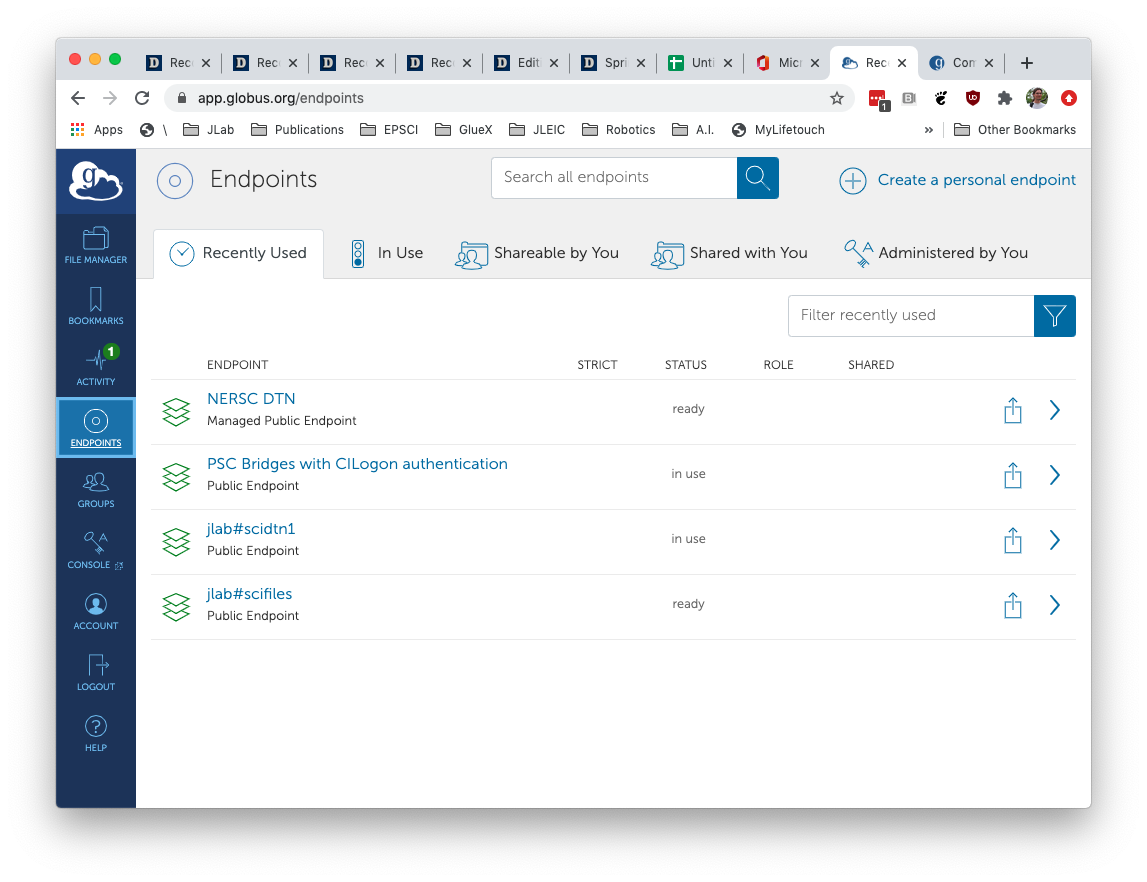

- Ensure all 3 endpoints on Globus are authenticated: PSC Bridges with CILogon authentication, jlab#scidtn1, and jlab#scifiles

- Create a directory under ~/PSC (e.g. ~gxproj4/PSC/2020.08.21.recon_2020-11_ver01_batch02)

- Go to the working directory above and check out the launch scripts and status plot scripts:

- Go to launch directory and edit the launch_psc.py script

- Make sure TESTMODE is set to True

- Modify configuration options at top of script. It is strongly recommended that you run a small test job first with the output written to mss:/mss/halld/halld-scratch/...

- Run the script with TESTMODE True and carefully look through the output. Verify that everything looks correct.

- Edit the jana_recon_nersc.config file to modify the CCDB timestamp and anything else that needs to be changed.

- Yes, we use the same config file for NERSC and PSC. That is not a typo on the preceding line.

- Commit changes to jana_recon_nersc.config

- Log into your account on bridges and check out the launch directory to an appropriate place

- Make a directory like ~/work/2020.08.25.recon_2020-11_ver01_batch02

- cd to above directory and do svn co https://halldsvn.jlab.org/repos/trunk/scripts/monitoring/launch

- Make note of this directory and modify the launch_psc.py script AT JLAB so the LAUNCHDIR points to it

- Double check everything and if it all seems right, modify the TESTMODE variable in the launch_psc.py script at JLab and run it.

Quick start on webpage with updating plots

- Go to the working directory created in step 4 above (e.g. ~gxproj4/PSC/2020.08.21.recon_2020-11_ver01_batch02)

- Checkout the source code

- Create a directory in the web server directory, but using gxproj5. E.g.:

- ssh gxproj5@ifarm1901

- cd /group/halld/www/halldweb/html/data_monitoring/recon

- mkdir summary_swif2_output_recon_2019-11_ver01_batch02_PSC

- Modify the main index.html for the launch_analysis page. (You can do this as gxproj4)

- emacs /group/halld/www/halldweb/html/data_monitoring/launch_analysis/index.html

- Add an appropriate link to the directory created in the previous step

- Go back to the hdswif2 directory and:

- Modify the auto_run.sh file to reflect the directory created above.

- Modify the regenerate_plots.csh file to reflect the start time when the launch_psc.py script was run.

- Modify the index.html file to reflect the correct title. It is also recommended to cut and paste the last part of the output from when the launch_psc.py script was run to the bottom of the index.html file.

- Run the auto_run.sh script. This program will stay in the foreground so make sure to do it in a window that can stay up.

Troubleshooting

If something stops working, here are some things to check:

- Globus endpoints expire. Make sure to keep them authenticated.

- The ssh agent could die, especially if ifarm1901 is rebooted. Check this by doing an ssh from the gxproj4 account to bridges and verifying that it does not require a password.

- Plots on web page don't update:

- When auto_run.sh is first run, it will generate a JSON file with information about runs from swif2. If the file already exists, it will read it and assume it is up to date. If you re-run launch_psc.py to add more runs to an existing workflow, you should remove the JSON file and restart auto_run.sh.

- The start date/time in regenerate_plots.csh may be off. The file contains start_date and plot_start variables. The system is much more complicated than it needs to be due to NERSC being 3 hours earlier than Virginia. For PSC, you'll need to set plot_start to be 3 hours earlier than start_date.

- Jobs stall:

- If you've checked that all of the authentications are up to date and just can't see what is causing the issue, contact Chris L. and ask him to take a look. Occasionally the swif2 engine needs to be reset and only he can do it.
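The plot_start offset mentioned above under "Plots on web page don't update" can be computed rather than worked out by hand. This is a minimal sketch only: the function name plot_start_for is hypothetical, and the date format actually expected inside regenerate_plots.csh is not shown on this page, so adapt the output to whatever that file uses.

```python
from datetime import datetime, timedelta

def plot_start_for(start_date, offset_hours=3):
    """Return the plot_start time: offset_hours earlier than start_date."""
    return start_date - timedelta(hours=offset_hours)

# Example launch time (made up for illustration).
start_date = datetime(2020, 8, 25, 14, 30)
print(plot_start_for(start_date))  # 2020-08-25 11:30:00
```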

Automatic Login

Bridges Documentation: https://www.psc.edu/bridges/user-guide/connecting-to-bridges

Note that at this time, running production jobs at PSC from the gxproj4 account requires use of your personal account at Bridges. I.e. we do not have a group account set up there.

You first need to make sure you have an account on bridges and can log in via ssh from JLab. See the documentation at the above link for that.

In order to support the type of automatic logins required for SWIF2, an ssh key pair must be installed on the bridges system for your account. This process involves submitting the key pair for approval so that it can be installed in your account. You can do this via the page below. You should generate a dedicated key pair for this purpose and not use your standard one.

https://grants.psc.edu/cgi-bin/ssh/listKeys.pl

Install the key pair in the .ssh directory of the gxproj4 account (as well as your home .ssh directory for convenience). Modify the .ssh/config file in the gxproj4 account to use this key when connecting to bridges. For example, here is what the settings are for using my key to get into my account on bridges.

    Host *.psc.edu
        IdentityFile ~/.ssh/id_rsa_IU
        User davidl

In order for swif2 to log in without being challenged for a password, an ssh-agent will need to be running on the host swif2 runs from. The ~/.cshrc file for the gxproj4 account has been set up to check for a file named ~/PSC/ssh_agent_<hostname> and to source it if found. This will set up the environment to use the agent running on the local machine. It is important that the agent be running on the machine swif2 is running on. At the time of this writing, SWIF2 IS USING ifarm1901.jlab.org. Note that a weakness of this system is that it does not detect if the agent is actually running. I have had agents running for months so you shouldn't need to restart it very often.
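Since the setup file is sourced without checking that the agent is still alive, a small check can help when troubleshooting. The sketch below is not part of the existing setup; the function names are hypothetical. It assumes the file holds the standard ssh-agent output (either csh "setenv" lines or sh "NAME=value; export NAME;" lines) and that it is run on the same host as the agent.

```python
import os
import re

def parse_agent_env(text):
    """Extract SSH_AUTH_SOCK and SSH_AGENT_PID from ssh-agent output (csh or sh syntax)."""
    env = {}
    for name in ("SSH_AUTH_SOCK", "SSH_AGENT_PID"):
        # csh form: "setenv NAME value;"   sh form: "NAME=value; export NAME;"
        match = re.search(r"(?:setenv\s+%s\s+|%s=)([^;\s]+)" % (name, name), text)
        if match:
            env[name] = match.group(1)
    return env

def agent_is_alive(env):
    """Return True if the recorded agent process exists and its socket is present."""
    pid = env.get("SSH_AGENT_PID")
    sock = env.get("SSH_AUTH_SOCK")
    if not pid or not sock:
        return False
    try:
        os.kill(int(pid), 0)  # signal 0 sends nothing; it only checks the process
    except ProcessLookupError:
        return False
    except PermissionError:
        pass  # the process exists but belongs to another user
    return os.path.exists(sock)
```

To use it, read the contents of ~/PSC/ssh_agent_<hostname>, pass the text to parse_agent_env, and pass the result to agent_is_alive. A False result suggests the agent needs to be restarted as described below.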

To start a new agent and create the environment setup file for it do this (the key given to ssh-add should be yours):

    ssh-agent > ${HOME}/PSC/ssh_agent_${HOST}
    source ${HOME}/PSC/ssh_agent_${HOST}
    ssh-add ${HOME}/.ssh/id_rsa_IU

Finally:

ssh bridges.psc.edu

Interactive Farm Node

To get an interactive node run the following:

interact --egress

The "--egress" option says to allow the node to interact with external networks.

Globus

The files are transferred to/from PSC using Globus. In order for this to work, you need 4 points of authentication set up. The first is in the gxproj4 account so swif2 can issue globus commands from there. The other 3 are endpoints that must be periodically re-authorized through the Globus web interface using your Globus account.

WARNING: The globus credentials set up for the gxproj4 account are used for both PSC and NERSC.

The globus command line interface is installed on mssdb17 and is run by swif2 there. Swif2 runs the globus commands automatically from the gxproj4 account (assuming that is where the workflow was started from). To check who the credentials are set up for, do this:

    > ssh gxproj4@ifarm1901
    > ssh mssdb17
    > globus whoami

If you need to change the globus account being used, then you'll need to issue a "globus logout" followed by a "globus login". See the following webpage for details.

Globus Commands: https://docs.globus.org/cli/reference/
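Because the same gxproj4 credentials serve both PSC and NERSC, it can be worth confirming the identity before starting a launch. The following is a rough sketch only: it wraps the "globus whoami" command shown above, would need to run on mssdb17 where the CLI is installed, and the function names and the example username are hypothetical.

```python
import subprocess

def current_globus_identity():
    """Run 'globus whoami' and return the identity it prints."""
    result = subprocess.run(
        ["globus", "whoami"], capture_output=True, text=True, check=True
    )
    return result.stdout.strip()

def identity_matches(whoami_output, expected_username):
    """Compare whoami output against the account you expect to be logged in."""
    return whoami_output.strip() == expected_username

# Typical use (hypothetical username):
#   identity_matches(current_globus_identity(), "someuser@globusid.org")
```

If the comparison fails, the "globus logout" and "globus login" sequence described above applies.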

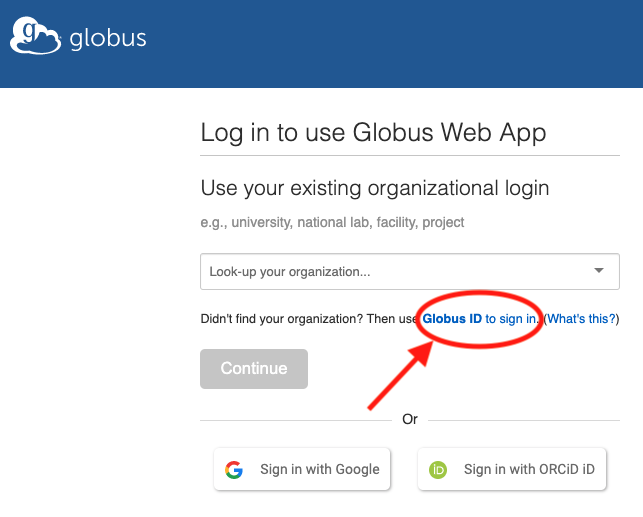

You will need your own Globus account if you don't have one already. Note that you will not be able to use your Google account for this. At the time of this writing, I use the "Globus ID" sign in option shown here. Be sure to use your Globus username/password and not your CUE username/password.

Endpoint Authentication

Once you have logged into the globus webpage, click the "Endpoints" link on the left hand side. For PSC, you will need to have 3 endpoints authenticated. These are PSC Bridges with CILogon authentication, jlab#scidtn1, and jlab#scifiles. The last two are used to transfer files from and to JLab respectively. Both must be active for jobs to complete. This should look something like the image below. Note that the expiration times for these endpoints are different. With their current limits, you will need to re-authenticate one endpoint at least every 6 days.

Storage

The primary storage area at bridges is "pylon5". Our project's directory is:

/pylon5/ph5pi4p

Storage is important since it determines the value of the maximum concurrent jobs that should be set for the swif2 workflow. The rule of thumb is:

MaxJobs = (available space)/27GB

The 27GB is 20GB for the raw data file and 7GB for the output products. Thus, if we are allocated 20TB of space, we can have a maximum of 740 concurrent jobs.
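The rule of thumb is simple enough to script when the allocation changes. This is a minimal sketch, assuming 1 TB = 1000 GB (which reproduces the 740 figure above); the function and constant names are made up for illustration.

```python
RAW_FILE_GB = 20  # size of one raw data file
OUTPUT_GB = 7     # size of the output products for one job

def max_concurrent_jobs(available_gb):
    """Return the largest whole number of jobs that fit in available_gb."""
    return int(available_gb // (RAW_FILE_GB + OUTPUT_GB))

# 20 TB allocation, taking 1 TB = 1000 GB
print(max_concurrent_jobs(20_000))  # 740
```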